Apple makes its next move. According to a new report from Bloomberg's Mark Gurman, covered by TechCrunch, the company is developing three new AI wearables designed to give Siri something it has never truly had before: real-time visual context.

The aim is not to create another standalone gadget to compete with the iPhone; quite the opposite. Apple wants these devices to act as the eyes and ears of its ecosystem, feeding information back to the iPhone, where it would all be processed through Apple Intelligence. Rather than replacing the screen, the idea is to surround it.

Smart glasses (without a screen)

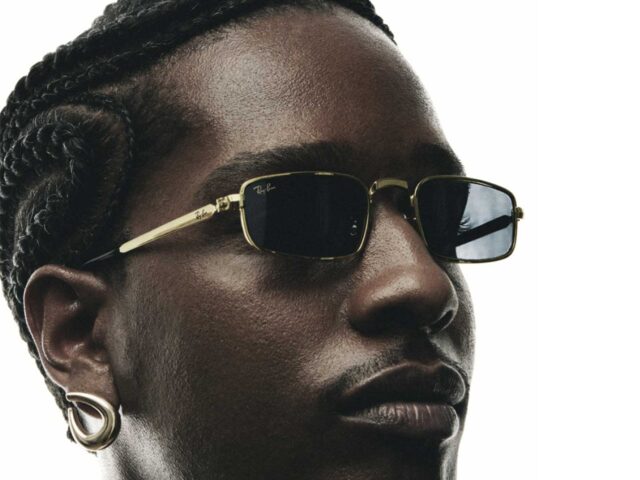

The most eye-catching project is a pair of smart glasses, internally codenamed N50. Unlike the Apple Vision Pro, which is bulkier and clearly geared towards spatial computing, these glasses would not include a display at all.

Their approach would be closer to that of the Ray-Ban Meta glasses, featuring integrated cameras, microphones and speakers to interact with Siri via audio. The key difference is that the heavy processing would be handled by the connected iPhone, helping to keep the design lightweight and improve battery efficiency.

The experience would be simple and intuitive. Look at something, ask a question and receive an answer without taking your phone out of your pocket. Quick photos, queries about what is in front of you or contextual assistance. No display, no distractions, everything running quietly in the background.

AirPods with cameras (but not for taking photos)

It may sound unusual, but the report also mentions AirPods equipped with low-resolution infrared cameras. They would not be designed to capture images in the traditional sense, but to provide spatial and environmental data to the AI. This could enable gesture controls or greater spatial awareness. Once again, the goal is not to create a standalone device, but to supply the iPhone with more contextual information.

A camera-equipped pendant: functional minimalism

The third device would be a small pendant, roughly the size of an AirTag, designed to be clipped onto clothing or worn as a necklace. Its role would mirror that of the glasses, acting as a passive visual sensor. According to the report, Apple is keen to avoid repeating the mistakes of previous standalone AI gadgets by ensuring these products function strictly as iPhone accessories rather than replacements.

A strategic shift in Apple’s AI approach

Instead of packing ever more powerful processors into new hardware formats, Apple appears to be pursuing a different strategy: using wearables as data-capture layers while keeping the iPhone as the central brain.

Given Apple's vast installed base of iPhone users, the move makes sense. Rather than pushing users towards an entirely new category of device, Apple could offer lighter, more accessible and more wearable entry points into the Apple Intelligence ecosystem.

That said, nothing is imminent. The report suggests production of the glasses could begin in December 2026, with a potential launch in 2027. The camera-equipped AirPods and pendant would likely follow a similar timeline, although plans may still evolve.

For now, one thing is clear. Apple does not just want you to talk to Siri. It wants Siri to look at the world with you. And that shifts the dynamic entirely.

Follow all the latest from HIGHXTAR on Facebook, Twitter or Instagram.